This is a shorter, more digestible version of my bachelor's thesis, intended mainly for my future self. If you are interested in the full paper or more information, feel free to contact me 😁.

Web Application Firewalls (WAFs) are critical for protecting web applications from cyber threats. With the rise of machine learning in WAFs, these systems are becoming more effective, but they are also becoming vulnerable to new types of attacks. My research specifically investigates the impact of transfer attacks on ML-based WAFs.

Machine Learning in WAFs: Traditional WAFs use static rules and assign “malicious scores” to web requests based on fixed criteria, which limits their adaptability. In contrast, ML-based WAFs use trained classifiers to label requests as malicious or benign, enabling them to adapt to evolving threats and reduce false positives more effectively.

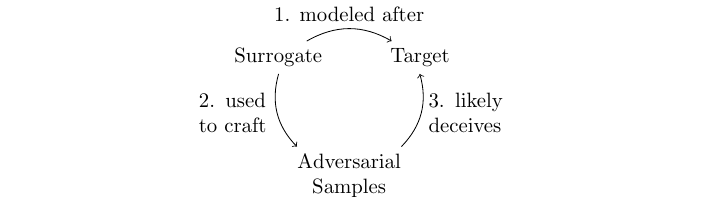
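To make the contrast between static scoring and ML classification concrete, here is a minimal, self-contained sketch. Everything in it is hypothetical: the three regex "rules", their severity scores, the blocking threshold, and the classifier weights are invented for illustration and are far simpler than real ModSecurity rules or the MLModSec models. Both detectors see the same rule matches. The static one sums fixed severities and compares the total against a threshold, while the ML one treats the matches as binary features for a logistic-regression model whose weights (hand-set here) would normally be learned from labeled traffic.

```python
import math
import re

# Hypothetical, heavily simplified rules: name -> (pattern, severity score).
RULES = {
    "sqli_union": (re.compile(r"union\s+select", re.I), 5),
    "sqli_comment": (re.compile(r"--|#|/\*"), 3),
    "sqli_tautology": (re.compile(r"'\s*or\s+'?\d+'?\s*=\s*'?\d+", re.I), 5),
}
ANOMALY_THRESHOLD = 5  # assumed blocking threshold for the static WAF


def triggered(payload: str) -> list[str]:
    """Names of all rules whose pattern matches the payload."""
    return [name for name, (pattern, _) in RULES.items() if pattern.search(payload)]


def static_waf(payload: str) -> bool:
    """Traditional approach: sum fixed severity scores and compare to a threshold."""
    score = sum(RULES[name][1] for name in triggered(payload))
    return score >= ANOMALY_THRESHOLD


# Hypothetical weights; an ML-based WAF would learn these from labeled requests.
WEIGHTS = {"sqli_union": 2.5, "sqli_comment": 1.0, "sqli_tautology": 3.0}
BIAS = -2.0


def ml_waf_probability(payload: str) -> float:
    """ML approach: rule matches become binary features for logistic regression."""
    hits = set(triggered(payload))
    z = BIAS + sum(w for name, w in WEIGHTS.items() if name in hits)
    return 1.0 / (1.0 + math.exp(-z))


def ml_waf(payload: str, threshold: float = 0.5) -> bool:
    """Flag the request as malicious if the predicted probability reaches the threshold."""
    return ml_waf_probability(payload) >= threshold


for p in ["hello world", "1' OR '1'='1", "x UNION SELECT pass --"]:
    print(f"{p!r}: static={static_waf(p)}, ml={ml_waf_probability(p):.2f}")
```

With these toy numbers the two detectors happen to agree; the difference is only where the decision boundary comes from (hand-assigned severities versus learned weights).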

Transfer Attacks: These black-box attacks use surrogate models to craft adversarial samples that deceive a target model without direct access to it. The attacker builds a similar model, crafts inputs that this surrogate classifies as benign, and relies on the target WAF misclassifying those inputs as benign as well.

To understand how vulnerable ML-based WAFs are to transfer attacks, this research used multiple ML-based WAF models and configurations, each designed to test a specific factor that may influence the success of these attacks.

In each experiment, adversarial samples were crafted for every surrogate model using the tool WAF-A-MoLE. This tool mutates the input payloads to reduce the “attack” confidence score that the corresponding surrogate model assigns to them, without altering the semantic intent of the payload. These samples were then transferred to the target model to test its susceptibility. The experiments measured the Transfer Success Rate (TSR), i.e., how often adversarial samples generated on a surrogate model also deceived the target model.
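The loop below is a toy sketch of the idea behind WAF-A-MoLE's guided mutation search, not its actual implementation or API: keep a priority queue of payloads ordered by the surrogate's score, repeatedly mutate the currently best (lowest-scoring) payload with a semantics-preserving SQL mutation, and stop once the score falls below the decision threshold. The three operators are simplified versions of mutations of the kind WAF-A-MoLE uses (case swapping, whitespace substitution, comment injection). `toy_surrogate_score`, `mutate_until_evasive`, the 0.5 threshold, and `transfer_success_rate` are hypothetical names; the TSR helper assumes the rate is computed over samples that already evade the surrogate.

```python
import heapq
import random
from typing import Callable

Mutation = Callable[[str, random.Random], str]


def case_swapping(payload: str, rng: random.Random) -> str:
    # SQL keywords are case-insensitive, so flipping letter case keeps the query's meaning
    # (simplification: this toy version may also flip letters inside string literals).
    return "".join(c.swapcase() if c.isalpha() and rng.random() < 0.5 else c for c in payload)


def _space_positions(payload: str) -> list[int]:
    return [i for i, c in enumerate(payload) if c == " "]


def whitespace_substitution(payload: str, rng: random.Random) -> str:
    # Replace one space with another whitespace character that SQL parsers accept.
    positions = _space_positions(payload)
    if not positions:
        return payload
    i = rng.choice(positions)
    return payload[:i] + rng.choice(["\t", "\n", "\r"]) + payload[i + 1:]


def comment_injection(payload: str, rng: random.Random) -> str:
    # Replace one space with an inline comment, which parsers such as MySQL's treat as a separator.
    positions = _space_positions(payload)
    if not positions:
        return payload
    i = rng.choice(positions)
    return payload[:i] + "/**/" + payload[i + 1:]


def toy_surrogate_score(payload: str) -> float:
    # Hypothetical surrogate: fraction of naive signatures found in the payload.
    signatures = [" or ", "union select", "' or '"]
    text = payload.lower()
    return sum(sig in text for sig in signatures) / len(signatures)


def mutate_until_evasive(payload: str, surrogate: Callable[[str], float],
                         mutations: list[Mutation], threshold: float = 0.5,
                         max_steps: int = 200, seed: int = 0) -> tuple[str, float]:
    rng = random.Random(seed)
    # Heap entries: (surrogate score, insertion counter as tie-breaker, payload).
    heap = [(surrogate(payload), 0, payload)]
    for step in range(1, max_steps + 1):
        score, _, best = heap[0]  # lowest-scoring payload found so far
        if score < threshold:
            break
        child = rng.choice(mutations)(best, rng)
        heapq.heappush(heap, (surrogate(child), step, child))
    score, _, best = heap[0]
    return best, score


def transfer_success_rate(adversarial: list[str],
                          target_is_malicious: Callable[[str], bool]) -> float:
    # Share of surrogate-evading samples that the target also classifies as benign.
    if not adversarial:
        return 0.0
    return sum(not target_is_malicious(s) for s in adversarial) / len(adversarial)


mutations = [case_swapping, whitespace_substitution, comment_injection]
adv, score = mutate_until_evasive("admin' or '1'='1", toy_surrogate_score, mutations)
print(repr(adv), score)
```

In a real experiment, `target_is_malicious` would wrap the target WAF's prediction, and the adversarial list would contain every sample that successfully evaded the surrogate.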

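The three primary experiments focused on:

- the similarity of the training and testing data of the surrogate and target models,
- the architecture of the surrogate and target models, and
- the paranoia level of the target model.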

The target and surrogate models are based on the MLModSec/AdvModSec models developed by Montaruli et al., which have some inherent limitations (specifically, their unrealistic training data) that make them unsuitable for real-world deployment. Thus, the findings of this research should be viewed as an illustration of the potential attack vectors that machine learning introduces into WAFs, rather than as a concrete guide to attacking ML-based WAFs in real life.

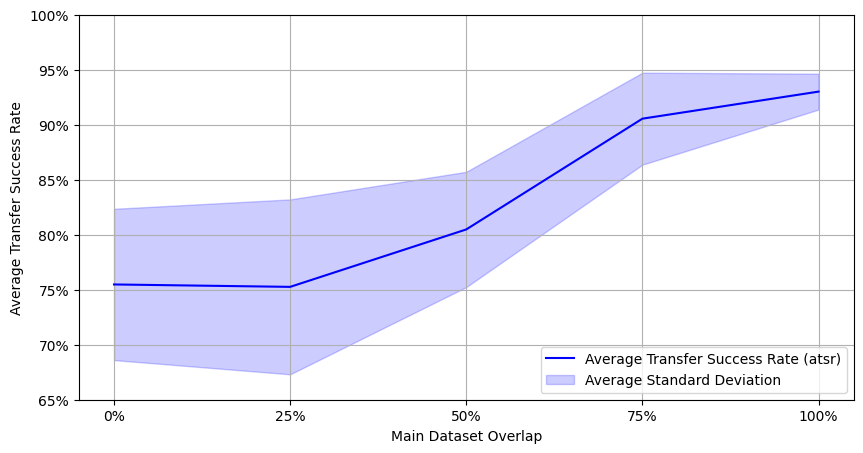

The first experiment aimed to understand how the similarity between the training and testing datasets of the surrogate and target models influenced the success of transfer attacks. The results show that the transfer success rate was significantly higher when the datasets were more similar, indicating that the overlap between the datasets is a critical factor in the success of transfer attacks.

The results also show that, depending on the setup, adversarial training can improve the target model's robustness against transfer attacks but may also inadvertently increase their success rate. This suggests that adversarial training may come with trade-offs that need to be carefully considered in practice.

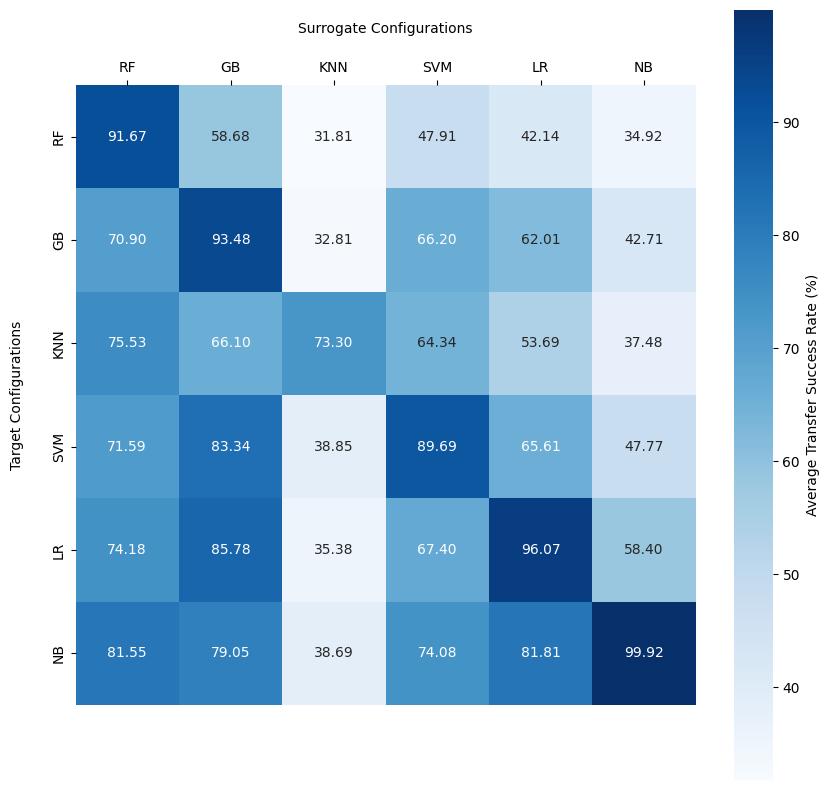

The second experiment investigated how the architecture of the surrogate and target models influenced the success of transfer attacks. The results show that the architecture of the models had a significant impact on the success of the attacks, with some architectures being more vulnerable to transfer attacks than others.

The results indicate that Random Forest models are more robust against transfer attacks than other architectures, but still show a high self-transfer success rate (i.e., when the surrogate is also a Random Forest).

RF: Random Forest, GB: Gradient Boosting, KNN: K-Nearest Neighbors, SVM: Support Vector Machine, LR: Logistic Regression, NB: Naive Bayes

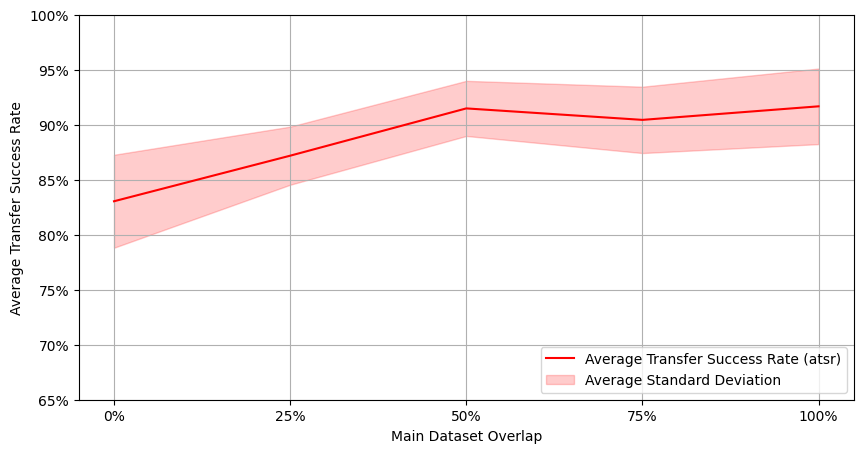

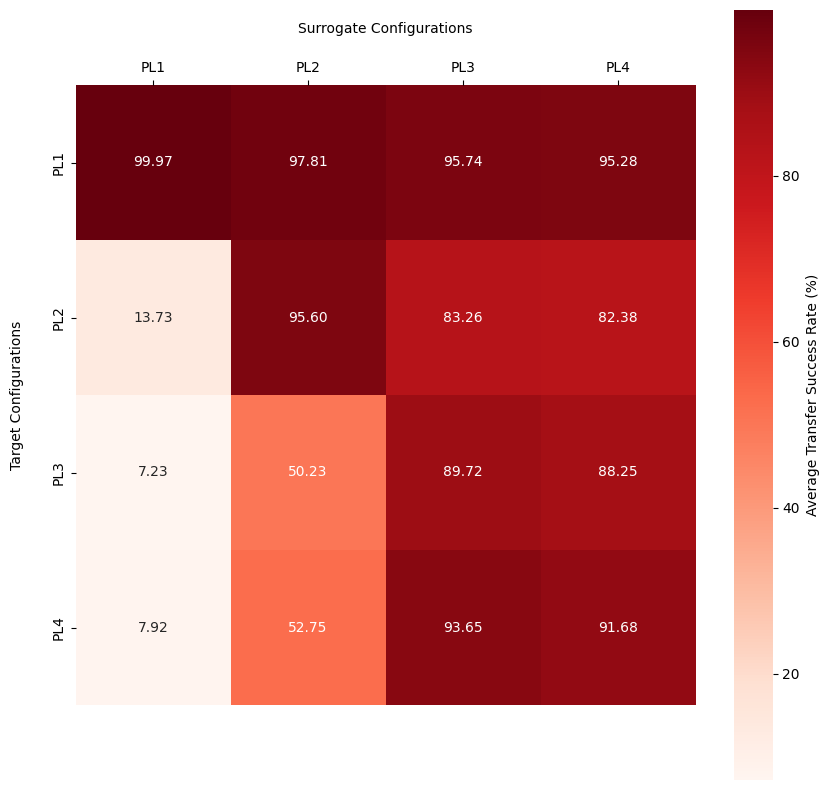

The third experiment focused on how the paranoia levels of the target model influenced the success of transfer attacks.

Explaining the concept of paranoia levels in detail is beyond the scope of this summary, but in short, the paranoia level determines the number of rules considered in the feature extraction process. The higher the paranoia level, the more rules are considered, leading to a more complex feature space.

In simple terms, the higher the paranoia level, the more information the actual ML classifier has to work with, which should theoretically make it harder for an attacker to craft adversarial samples that deceive the model.
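A minimal sketch of how a paranoia level can gate feature extraction, assuming (as in the ModSecurity Core Rule Set) that a rule assigned to paranoia level n stays active at every level at or above n. The rule names, patterns, and level assignments are hypothetical; the point is only that raising the level lengthens the feature vector the classifier receives.

```python
import re

# Hypothetical rules: (name, paranoia level at which the rule becomes active, pattern).
RULES = [
    ("sqli_tautology", 1, re.compile(r"'\s*or\s+", re.I)),
    ("sqli_union", 1, re.compile(r"union\s+select", re.I)),
    ("sqli_comment", 2, re.compile(r"/\*|--|#")),
    ("sql_keywords", 3, re.compile(r"\b(?:select|from|where)\b", re.I)),
    ("sql_special_chars", 4, re.compile(r"['\";=]")),
]


def active_rules(paranoia_level: int) -> list[str]:
    # A rule assigned to PL n is enabled at PL n and every higher level.
    return [name for name, level, _ in RULES if level <= paranoia_level]


def extract_features(payload: str, paranoia_level: int) -> list[int]:
    # One binary feature per active rule: 1 if the rule matches the payload, else 0.
    return [int(bool(pattern.search(payload)))
            for _, level, pattern in RULES if level <= paranoia_level]


payload = "1' OR 1=1 --"
for pl in range(1, 5):
    print(pl, active_rules(pl), extract_features(payload, pl))
```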

The results show that the paranoia level of the target model had a significant impact on the success of transfer attacks. Generally speaking, the higher the paranoia level of the target models, the lower the success rate of the attacks, indicating that more complex feature spaces make it harder for attackers to deceive the model.

However, the results also show a small increase in the transfer success rate from PL 3 to PL 4. In this setup, PL 4 adds a “high impact” rule to the feature extraction process. This rule is often triggered by attacks but not by benign inputs, so the models learn to rely heavily on it when making decisions. As a result, payloads adversarially crafted to avoid triggering this rule are more likely to be misclassified as benign.
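A toy illustration of this failure mode, assuming a linear (logistic) model over binary rule features. The weights and rule names are invented: one hypothetical `high_impact` rule carries most of the weight, so a payload that still triggers every other rule is scored as benign once it avoids that single rule.

```python
import math

# Hypothetical learned weights for a PL 4 model: one rule dominates the decision.
WEIGHTS = {"rule_a": 0.6, "rule_b": 0.5, "rule_c": 0.4, "high_impact": 4.0}
BIAS = -3.0


def malicious_probability(triggered_rules: set[str]) -> float:
    # Logistic regression over binary rule features (absent rules contribute 0).
    z = BIAS + sum(WEIGHTS[name] for name in triggered_rules)
    return 1.0 / (1.0 + math.exp(-z))


original = {"rule_a", "rule_b", "rule_c", "high_impact"}
adversarial = original - {"high_impact"}  # crafted to avoid only the high-impact rule

print(f"original:    {malicious_probability(original):.2f}")
print(f"adversarial: {malicious_probability(adversarial):.2f}")
```

With these numbers the script prints 0.92 for the original payload and 0.18 for the adversarial one: dropping a single dominant feature flips the decision even though three other rules still fire.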

The main takeaways from this research are:

Thanks for reading! You can find the source code for the experiments here.