The agent autonomously testing a registration form.

This tutorial explains how I created the “InteractionAgent” module of Eyegee-Exec and how you can use it to create your own LLM-guided Web Testing Agent. This agent is capable of autonomously generating test scenarios for web applications and executing them using Selenium.

An LLM-guided Web Testing Agent can be a powerful tool for automating the testing of web applications. In the case of Eyegee-Exec, I use this type of agent to autonomously spider a website, map its interactions, and generate test scenarios for each interaction. This allows me to quickly test the functionality of a web application, identify outgoing API calls, and get a sense of the application’s structure.

I believe that in the future, agents like this will be a standard tool for web developers and testers. They will be able to automate some of the testing process, providing a foundation for more advanced testing that requires human intuition and creativity. This tool is an attempt at, or “proof of concept” of, this idea.

I hope this tutorial will be useful to you or just give you a sense of what is possible with LangGraph and Selenium 😅.

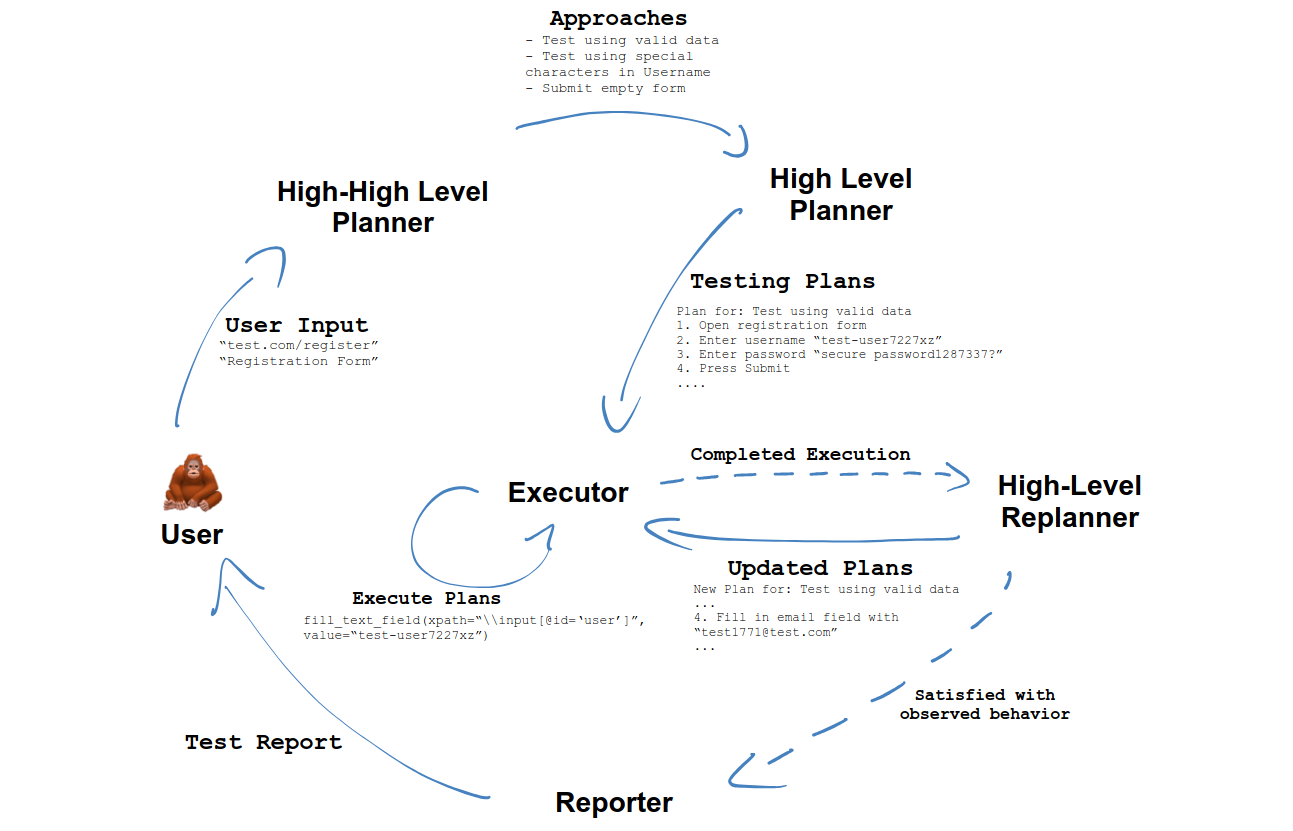

I have tried a few different architectures for this agent. The one that I found most reliable/performant is the one shown below.

Overview of the agent’s architecture.

The High-High Level Planner accepts the interaction to test (as a string) and the URL, generating a list of concise approaches that represent different test scenarios. These approaches are abstract guides for the agent; for example, “Test the search field with a valid query” or “Test the search field with special characters”.

For each approach, the High Level Planner creates a detailed list of actions specifying the exact steps to execute. For instance, for “Test the search field with a valid query” the actions might be “Open the search field”, “Type ‘test’ in the search field”, and “Click the search button”.

The Executor takes these actions and performs them using Selenium. Based on the prebuilt LangGraph ReAct Agent, it can execute tool calls and adjust input parameters based on results. The tools are essentially Selenium commands, and the input parameters are the elements to interact with.

The High Level Replanner analyzes the initial plan and the Executor’s observed behavior, adjusting the plan if errors or unforeseen behaviors occur. For example, if a form introduces a second step with new inputs not initially considered, the Replanner updates the plan to include them. If satisfied with the execution, it proceeds to the final component.

The Reporter generates a report of the executed test scenarios, detailing the results of each action and any errors encountered during execution.

Of course, this many components are not necessary for a simple agent. However, in my experience, having this many abstraction layers makes the agent easier to maintain and less prone to hallucinations.
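To make the data flow between the five components concrete, here is a plain-Python sketch with stub callables standing in for the LLM calls and for Selenium. It is not the agent's real code: `Plan`, `Outcome`, `run_pipeline`, and the `max_rounds` safety cap are hypothetical names I introduce for illustration only.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Plan:
    approach: str
    steps: List[str]


@dataclass
class Outcome:
    approach: str
    observations: List[str] = field(default_factory=list)


def run_pipeline(
    interaction: str,
    plan_approaches: Callable[[str], List[str]],     # High-High Level Planner
    plan_steps: Callable[[str], Plan],               # High Level Planner
    execute: Callable[[Plan], Outcome],              # Executor
    replan: Callable[[Outcome], Optional[Plan]],     # High Level Replanner
    report: Callable[[List[Outcome]], str],          # Reporter
    max_rounds: int = 3,  # assumption: a cap so the sketch always terminates
) -> str:
    plans = [plan_steps(approach) for approach in plan_approaches(interaction)]
    finished: List[Outcome] = []
    rounds = 0
    while plans and rounds < max_rounds:
        outcomes = [execute(plan) for plan in plans]
        plans = []
        for outcome in outcomes:
            new_plan = replan(outcome)
            if new_plan is None:
                finished.append(outcome)   # accepted, goes into the report
            else:
                plans.append(new_plan)     # needs another execution round
        rounds += 1
    return report(finished)


def demo() -> str:
    seen = set()

    def replan_once(outcome: Outcome) -> Optional[Plan]:
        # Request one revised plan per approach, then accept the result.
        if outcome.approach in seen:
            return None
        seen.add(outcome.approach)
        return Plan(outcome.approach, ["Retry including the second form step"])

    return run_pipeline(
        "search field",
        plan_approaches=lambda i: [f"Test the {i} with a valid query"],
        plan_steps=lambda a: Plan(a, ["Type 'test'", "Click the search button"]),
        execute=lambda p: Outcome(p.approach, [f"done: {s}" for s in p.steps]),
        replan=replan_once,
        report=lambda done: "\n".join(
            f"{o.approach}: {len(o.observations)} steps" for o in done
        ),
    )


print(demo())
```

The loop between executor and replanner is the part that LangGraph later expresses as a conditional edge.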

I recommend using the code in the Repo to follow along with this tutorial. Since this system is quite complex, I will not be able to cover every detail here. However, I will try to explain the most important parts.

For this tutorial, you will need to have Python installed on your machine. You will also need to install the following libraries:

selenium

langchain

langchain-openai

langchain-anthropic

langgraph

pydantic

python-dotenv

bs4

rich

Keep in mind that you will need to have a WebDriver installed for your browser of choice. For this tutorial, I will be using the Chrome WebDriver.

You will also need to create a .env file in the root of your project with either an ANTHROPIC_API_KEY or an OPENAI_API_KEY variable.

    ANTHROPIC_API_KEY=your_api_key
    # or
    OPENAI_API_KEY=your_api_key

Next, the config.py file contains the main configuration for the agent. Here you can set the specific model and the browser to use.

    import logging
    from urllib.parse import urlparse

    from dotenv import load_dotenv
    from langchain_anthropic import ChatAnthropic
    from langchain_core.output_parsers import StrOutputParser
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options as ChromeOptions
    from selenium.webdriver.chrome.service import Service as ChromeService

    logger = logging.getLogger(__name__)  # assumption: the repo may set up its logger differently


    class Config:
        def __init__(self, website: str) -> None:
            load_dotenv()

            ####### Selenium #######
            self.chromedriver_path = "/usr/bin/chromedriver"
            self.service = ChromeService(executable_path=self.chromedriver_path)
            self.options = ChromeOptions()
            self.options.add_argument("--lang=en")
            # Enable Chrome's performance log so network traffic can be read back later
            self.options.add_experimental_option("perfLoggingPrefs", {"enableNetwork": True})
            self.options.set_capability("goog:loggingPrefs", {"performance": "ALL"})
            self.driver = webdriver.Chrome(service=self.service, options=self.options)
            self.selenium_rate = 0.5

            ####### Model #######
            # self.model = ChatOpenAI(model="gpt-4o-mini", temperature=0.2)
            # self.advanced_model = ChatOpenAI(model="gpt-4o-mini", temperature=0.2)
            self.model = ChatAnthropic(model_name="claude-3-5-haiku-latest", temperature=0.2)
            self.advanced_model = ChatAnthropic(
                model_name="claude-3-5-haiku-latest", temperature=0.2
            )
            self.parser = StrOutputParser()

            ####### Target #######
            try:
                parsed_url = urlparse(website)
                self.target = f"{parsed_url.scheme}://{parsed_url.netloc}"
                self.initial_path = parsed_url.path
            except Exception as e:
                logger.error(f"Error parsing target URL: {e}")
                exit(1)

The Selenium options enable Chrome's performance logging, which allows us to capture network requests and responses. The selenium_rate variable is the time in seconds to wait between Selenium commands.

The model and advanced_model variables are the models used for the agent. In this case, I am using the claude-3-5-haiku-latest model from Anthropic. I added the advanced_model variable to allow for a better model for the report generation.
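To give a sense of what the performance log enabled in the config contains, here is a small sketch that pulls the outgoing requests out of log entries shaped like those returned by `driver.get_log("performance")`. The `extract_requests` helper and the sample data are hypothetical and written by hand; the parser in the repo is more involved.

```python
import json
from typing import Dict, List


def extract_requests(log_entries: List[dict]) -> List[Dict[str, str]]:
    """Collect method and URL of every request in Chrome performance log entries."""
    requests = []
    for entry in log_entries:
        # Each entry wraps one DevTools event as a JSON string under "message".
        event = json.loads(entry["message"])["message"]
        if event.get("method") != "Network.requestWillBeSent":
            continue  # skip responses, timing events, and so on
        request = event["params"]["request"]
        requests.append({"method": request["method"], "url": request["url"]})
    return requests


# Hand-written sample in the shape of the Chrome performance log.
sample_log = [
    {
        "message": json.dumps(
            {
                "message": {
                    "method": "Network.requestWillBeSent",
                    "params": {
                        "request": {
                            "method": "POST",
                            "url": "https://example.com/api/register",
                        }
                    },
                }
            }
        )
    },
    {
        "message": json.dumps(
            {"message": {"method": "Network.responseReceived", "params": {}}}
        )
    },
]

print(extract_requests(sample_log))
```

With a live driver, the list passed in would come from `driver.get_log("performance")` instead of `sample_log`.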

The following functions define the components of the agent. Please take a look at the src/interaction_agent/agent.py file for the full implementation. I will only cover the most important parts here, omitting some details for brevity.

The State class is a TypedDict that represents the state of the agent at any given time. Each component of the agent can access the state. The return value of each component is a dictionary that updates the state.

    class State(TypedDict):
        uri: str  # The URI of the page to test
        interaction: str  # The interaction to test
        page_soup: str  # The HTML of the page
        limit: str  # The limit of approaches to generate
        approaches: List[str]  # The approaches to test (populated by the high-high level planner)
        plans: List[PlanModel]  # The plans to execute (populated by the high level planner)
        tests: Annotated[List[TestModel], operator.add]  # The observed behavior (populated by the executor)
        report: str  # The report of the test (populated by the reporter)

The state is passed to the high_high_level_planner_step function, which generates a list of approaches based on the interaction and the page’s HTML.

    def high_high_level_planner_step(state: State):
        approaches = high_high_level_planner.invoke(
            {
                "uri": state["uri"],
                "interaction": state["interaction"],
                "page_soup": state["page_soup"],
                "limit": state["limit"],
            }
        )
        # adjust length if above limit
        if len(approaches.approaches) > int(state["limit"]):
            approaches.approaches = approaches.approaches[: int(state["limit"])]
        return {"approaches": approaches.approaches}

The high-high level planner is a simple chain that pipes a prompt into the model to generate approaches based on the interaction and the page’s HTML.

    high_high_level_planner = high_high_level_planner_prompt | self.cf.model.with_structured_output(HighHighLevelPlan)

Key here is the .with_structured_output(HighHighLevelPlan) method, which allows us to define the output structure of the model.

In this case the HighHighLevelPlan is a Pydantic model that represents the output of the high-high level planner.

    class HighHighLevelPlan(BaseModel):
        """High-level plan for testing an interaction feature."""

        approaches: List[str] = Field(
            description="Different approaches to test an interaction feature, should be in sorted order. Pay attention to the specified limit of approaches."
        )

This class is defined in src/interaction_agent/classes.py.
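Conceptually, structured output means the model's reply arrives as a typed object instead of free text. The stdlib-only sketch below imitates that contract, together with the limit check from high_high_level_planner_step. `ApproachList` and `parse_approaches` are hypothetical names; in the agent itself the validation is handled by LangChain and Pydantic, not by code like this.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class ApproachList:
    approaches: List[str]


def parse_approaches(raw_reply: str, limit: int) -> ApproachList:
    """Parse a JSON reply into a typed object and enforce the approach limit."""
    data = json.loads(raw_reply)
    approaches = data.get("approaches") if isinstance(data, dict) else None
    if not isinstance(approaches, list) or not all(
        isinstance(item, str) for item in approaches
    ):
        raise ValueError("expected 'approaches' to be a list of strings")
    # The model may ignore the requested limit, so truncate defensively.
    return ApproachList(approaches=approaches[:limit])


reply = json.dumps(
    {
        "approaches": [
            "Test the search field with a valid query",
            "Test the search field with special characters",
            "Test the search field with an empty query",
        ]
    }
)
print(parse_approaches(reply, limit=2).approaches)
```

Truncating after parsing mirrors the "adjust length if above limit" step: the schema description asks the model to respect the limit, but the code does not rely on it.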

The high_level_plan_step function generates a list of plans based on the approaches generated by the high-high level planner.

    def high_level_plan_step(state: State):
        plans = []
        for i, approach in enumerate(state["approaches"]):
            plan = high_level_planner.invoke(
                {
                    "uri": state["uri"],
                    "interaction": state["interaction"],
                    "page_soup": state["page_soup"],
                    "approach": approach,
                    "interaction_context": format_context(state["interaction_context"]),
                }
            )
            plans.append(plan)
        return {"plans": plans}

Similar to the high-high level planner, the high level planner is a simple chain that pipes a prompt into the model to generate plans based on the approach and the page’s HTML.

Again, the key here is the .with_structured_output(HighLevelPlan) method, which allows us to define the output structure of the model.

The execute_step function sets up the environment, executes the plans generated by the high level planner, and stores the observations in the state. This is by far the most complex part of the agent, because of the additional functionality required to parse outgoing requests and so on.

If we only wanted to execute the plans and gather the observations (without the additional functionality), we could use a reduced executor like this:

    def execute_step(state: State):
        tests = []
        uri = state["uri"]
        plans = state["plans"]
        # Execute each plan
        for i, plan in enumerate(state["plans"]):
            context = ToolContext(cf=self.cf, initial_uri=uri)  # Create a new context for each test
            tools = self._init_tools(context)
            solver = create_react_agent(
                self.cf.model, tools=tools, prompt=react_agent_prompt, output_parser=JSONAgentOutputParser()
            )
            solver_executor = AgentExecutor(agent=solver, tools=tools)
            soup_before = filter_html(BeautifulSoup(self.cf.driver.page_source, "html.parser"))
            test = TestModel(
                approach=plan.approach, steps=[], soup_before_str=soup_before.prettify(), plan=plan
            )
            self.cf.driver.get(f"{self.cf.target}{uri}")
            time.sleep(self.cf.selenium_rate)
            for j, task in enumerate(plan.plan):
                completed_task = CompletedTask(task=task)
                try:
                    solved_state = solver_executor.invoke(
                        {
                            "task": task,
                            "interaction": state["interaction"],
                            "page_soup": state["page_soup"],
                            "approach": plan.approach,
                            "plan_str": "\n".join(plan.plan),
                        }
                    )
                    completed_task.status = solved_state["output"]["status"]
                    completed_task.result = solved_state["output"]["result"]
                except Exception as e:
                    completed_task.status = "error"
                    completed_task.result = str(e)
                completed_task.tool_history = context.get_tool_history_reset()
                test.steps.append(completed_task)
            # getting page source
            test.soup_after_str = filter_html(BeautifulSoup(self.cf.driver.page_source, "html.parser")).prettify()
            tests.append(test)
        return {
            "tests": state["tests"] + tests,
            "plans": [],
        }

This function uses the create_react_agent function to create a ReAct agent that executes the plans. The AgentExecutor class is used to execute the agent and gather the observations, which are then stored in the tests variable.

The ToolContext object stores the state and observations of each tool call. This makes it possible to save information during a tool call and pass it on to the Replanner.
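A minimal sketch of such a context object, assuming it only needs to collect a history of tool calls and hand it over once per task. Only the `initial_uri` argument and the `get_tool_history_reset` method name come from the executor code above; the class name `ToolContextSketch`, the `record` method, and the shape of each history entry are my own assumptions.

```python
from typing import Any, Dict, List


class ToolContextSketch:
    """Shared object that tools write to while the executor works on one task."""

    def __init__(self, initial_uri: str) -> None:
        self.initial_uri = initial_uri
        self._tool_history: List[Dict[str, Any]] = []

    def record(self, tool: str, tool_input: str, output: str) -> None:
        # Hypothetical hook: each tool would call this after it has run.
        self._tool_history.append(
            {"tool": tool, "input": tool_input, "output": output}
        )

    def get_tool_history_reset(self) -> List[Dict[str, Any]]:
        # Return everything recorded so far and start a fresh history,
        # so the next task does not inherit calls from the previous one.
        history, self._tool_history = self._tool_history, []
        return history


context = ToolContextSketch(initial_uri="/register")
context.record("fill_text_field", "#email", "typed 'a@b.c'")
context.record("click_button", "#submit", "clicked")
print(context.get_tool_history_reset())
```

Resetting on read is what lets the executor attach a separate tool history to every completed task while reusing one context per test.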

Each test is a TestModel object, just containing information about the plan execution.

    class TestModel(BaseModel):
        """Model for representing a test for a single approach."""

        approach: str = Field(description="The approach for the interaction feature.")
        plan: PlanModel = Field(description="The plan for this approach.")
        steps: List[CompletedTask] = Field(description="The steps executed for this approach.")
        soup_before_str: str = Field(description="The soup before the test.")
        soup_after_str: str = Field(default=None, description="The soup after the test.")
        outgoing_requests_after: List[ApiModel] = Field(default=None, description="The outgoing requests after the test.")
        checked: bool = Field(default=False, description="Whether the test has been checked for this approach.")
        in_report: bool = Field(default=False, description="Whether the test is in the final report.")

After executing the plans, the agent returns the updated state with the observations stored in the tests variable.

The high_level_replan_step function analyzes the initial plan and the observed behavior, adjusting the plan if errors or unforeseen behaviors occur. It essentially just formats the observations and the plan into a string, which is then passed to an LLM. Depending on the output of the LLM, the plan is adjusted and the Executor is called again, or all plans are considered executed and the agent proceeds to the final component.
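One of the observations handed to the LLM is a diff of the page source before and after the test, built with difflib.unified_diff from the standard library. A self-contained illustration, assuming two tiny hand-written HTML snapshots (`page_diff` is a hypothetical helper name, not one from the repo):

```python
from difflib import unified_diff


def page_diff(soup_before: str, soup_after: str) -> str:
    """Return a unified diff of two prettified HTML strings."""
    diff_lines = unified_diff(
        soup_before.splitlines(),
        soup_after.splitlines(),
        lineterm="",  # inputs have no trailing newlines, so add none to headers
    )
    return "\n".join(diff_lines).strip()


before = '<form>\n  <input name="email">\n</form>'
after = (
    '<form>\n'
    '  <input name="email">\n'
    '  <p class="error">Invalid email</p>\n'
    '</form>'
)

print(page_diff(before, after))
```

Lines starting with `+` are elements that appeared during the test, here a validation error. In the agent, the same diff is computed inside the replanner step: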

    def high_level_replan_step(state: State):
        """
        Loops over all tests in current state and decides if a new plan is needed for each test.
        """
        new_plans = []
        tests = state["tests"]
        tests_to_check = [test for test in tests if not test.checked]
        tests_checked = [test for test in tests if test.checked]
        for i, test in enumerate(tests_to_check):
            uri = state["uri"]
            interaction = state["interaction"]
            page_source_diff = unified_diff(
                test.soup_before_str.splitlines(),
                test.soup_after_str.splitlines(),
                lineterm="",
            )
            page_source_diff = "\n".join(list(page_source_diff)).strip()
            input = {
                "uri": uri,
                "interaction": interaction,
                "approach": test.approach,
                "previous_plan": "\n".join([f"- {step}" for step in test.plan.plan]),
                "steps": format_steps(test.steps),
                "outgoing_requests": api_models_to_str(test.outgoing_requests_after),
                "page_source_diff": page_source_diff,
            }
            decision = high_level_replanner.invoke(input=input)
            if isinstance(decision.action, PlanModel):
                # The LLM wants another attempt: keep this test out of the report
                test.checked = True
                test.in_report = False
                tests_checked.append(test)
                new_plans.append(PlanModel(approach=test.approach, plan=decision.action.plan))
            elif isinstance(decision.action, Response):
                # The LLM is satisfied: mark this test for the final report
                test.checked = True
                test.in_report = True
                tests_checked.append(test)
        return {"tests": tests_checked, "plans": new_plans}

The high_level_replanner is a simple chain that pipes a prompt into the model to generate a new plan based on the observations and the initial plan.

    high_level_replanner = high_level_replanner_prompt | self.cf.model.with_structured_output(Act)

Key here is the .with_structured_output(Act) method, which allows us to define the output structure of the model.

In this case the Act is a Pydantic model that represents the output of the high level replanner.

    class Act(BaseModel):
        """Action to perform."""

        action: Union[Response, PlanModel] = Field(
            description="Action to perform. If you want to respond to user, send a Response text. \n"
            "If you would like to provide a new, updated plan, use PlanModel."
        )

This class is defined in src/interaction_agent/classes.py.

The Union type allows us to define multiple possible outputs for the model, telling the LLM to either return a new plan or a response to the user.
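The effect of the union on control flow can be sketched with plain dataclasses. The class names mirror the ones in the tutorial, but their fields are simplified assumptions (the `response` field in particular is my guess), and `next_node` is a hypothetical helper that combines what the replanner step and the routing function do.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Response:
    response: str  # assumption: a single text field


@dataclass
class PlanModel:
    approach: str
    plan: List[str]


@dataclass
class Act:
    action: Union[Response, PlanModel]


def next_node(decisions: List[Act]) -> str:
    """Pick the next graph node from the replanner's decisions."""
    # Every PlanModel is a request for another execution round.
    new_plans = [d.action for d in decisions if isinstance(d.action, PlanModel)]
    # No new plans left means every test was accepted, so reporting can start.
    return "executer" if new_plans else "reporter"


accepted = Act(action=Response(response="The form rejected the invalid email."))
retry = Act(
    action=PlanModel(
        approach="Test the form with a valid email",
        plan=["Fill in the second step of the form", "Click submit"],
    )
)

print(next_node([accepted, retry]))
print(next_node([accepted]))
```

A single new plan is enough to send the whole graph back to the executor; only when every decision is a Response does the run move on to the reporter.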

The report_step function is again pretty straightforward. It formats the observations and the plan into a prompt, which is then passed to an LLM to generate a report. Additionally, the LLM decides if any new interaction_context is needed. This allows the LLM to decide which important information to keep for the next interact call and include in its planning process (e.g. valid credentials from a registration form interaction could be passed to other interactions).

    def report_step(state: State):
        interaction = json.dumps(state["interaction"], indent=4)
        uri = state["uri"]
        messages = [
            (
                "system",
                system_reporter_prompt.format(interaction=interaction, uri=uri)
                .replace("{", "{{")
                .replace("}", "}}"),
            )
        ]
        tests_to_report = [test for test in state["tests"] if test.in_report]
        for test in tests_to_report:
            steps = format_steps(test.steps)
            page_source_diff = unified_diff(
                test.soup_before_str.splitlines(),
                test.soup_after_str.splitlines(),
                lineterm="",
            )
            page_source_diff = "\n".join(list(page_source_diff)).strip()
            this_human_reporter_prompt = human_reporter_prompt.format(
                approach=test.approach,
                plan="\n".join(test.plan.plan),
                outgoing_requests=api_models_to_str(test.outgoing_requests_after),
                page_source_diff=page_source_diff,
                steps=steps,
            )
            messages.append(("user", this_human_reporter_prompt.replace("{", "{{").replace("}", "}}")))
        messages.append(("placeholder", "{messages}"))
        reporter_prompt = ChatPromptTemplate.from_messages(messages)
        reporter = reporter_prompt | self.cf.advanced_model.with_structured_output(ReporterOutput)
        out = reporter.invoke(input={})
        return {"report": out.report, "new_interaction_context": out.new_interaction_context}

The _init_app function initializes the agent, defining the workflow and the components of the agent.

    def _init_app(self):
        def should_report(state: State) -> Literal["executer", "reporter"]:
            if "plans" in state and state["plans"] == []:
                return "reporter"
            else:
                return "executer"

        # SNIP: define all the functions mentioned above here

        workflow = StateGraph(State)
        workflow.add_node("high_high_level_planner", high_high_level_planner_step)
        workflow.add_node("high_level_planner", high_level_plan_step)
        workflow.add_node("executer", execute_step)
        workflow.add_node("high_level_replanner", high_level_replan_step)
        workflow.add_node("reporter", report_step)

        workflow.add_edge(START, "high_high_level_planner")
        workflow.add_edge("high_high_level_planner", "high_level_planner")
        workflow.add_edge("high_level_planner", "executer")
        workflow.add_edge("executer", "high_level_replanner")
        workflow.add_conditional_edges("high_level_replanner", should_report)
        workflow.add_edge("reporter", END)

        app = workflow.compile()
        return app

We can then run the agent by calling the invoke method on the app.

    final_state = self.app.invoke(
        input={
            "interaction": interaction,
            "uri": uri,
            "page_soup": soup,
            "limit": limit,
            "interaction_context": interaction_context,
        }
    )

The output of the agent is the final state, which contains the report of the executed test scenarios, the observed URIs and API requests, and information to be passed to further interactions.

If you use the code in the Repo, you can run the agent by running the demo.py file.

    from config import Config
    from src.interaction_agent.agent import InteractionAgent
    from src.llm.api_parser import LLM_ApiParser

    target = "https://demoqa.com/webtables"

    print()
    print("Starting interaction agent demo...")
    print()

    config = Config(target)
    agent = InteractionAgent(cf=config, llm_page_request_parser=LLM_ApiParser(config))
    agent.interact(uri="/webtables", interaction="Coworker Table", limit="1")

I hope this tutorial has given you a good overview of how to create an LLM-guided Web Testing Agent with LangGraph and Selenium. This agent is capable of autonomously generating test scenarios for web applications and executing them using Selenium.

Feel free to check out Eyegee-Exec for a more complete implementation of this agent. Here is a Video Demo of Eyegee-Exec in action. If you have any questions or feedback, feel free to reach out to me 😄.